OpenXR Unity

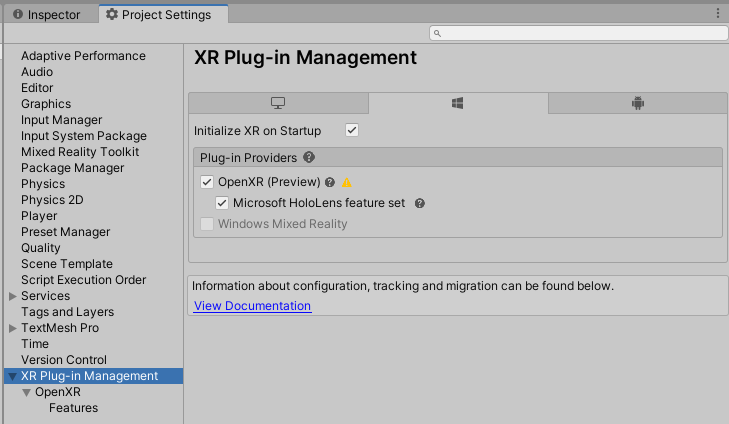

- OpenXR in Unity is only supported on Unity 2020.2 and higher. Currently, it also only supports x64 and ARM64 builds. Follow the Using the Mixed Reality OpenXR Plugin for Unity guide, including the steps for configuring XR Plugin Management and Optimization to install the OpenXR plug-in to your project.

- MSBuild for Unity support: Support for MSBuild for Unity has been removed as of the 2.5.2 release, to align with Unity's new package guidance.

- Known issues (OpenXR): There's currently a known issue with Holographic Remoting and OpenXR, where hand joints aren't consistently available.

Back in 2017, Microsoft joined OpenXR, a standard designed to improve interoperability between the various applications and hardware interfaces used in VR. In early 2019, Microsoft released a preview version of the OpenXR runtime for the Windows Mixed Reality platform, based on the OpenXR draft spec. In July 2019, Microsoft released the first OpenXR 1.0 runtime that supports mixed reality, for all Windows Mixed Reality and HoloLens 2 users.

The two big changes coming in 2021 for Unity XR Toolkit: The New Input System and OpenXR support.

Right now, with OpenXR, you can develop engines and apps that target HoloLens 2 with the same API that you use to target PC VR headsets, including Windows Mixed Reality headsets, Oculus Rift headsets and SteamVR headsets. Since OpenXR-based apps are portable across hardware platforms, Microsoft is stopping the development of legacy WinRT APIs. Existing WinRT API-based apps will continue to work on HoloLens 2 and Windows Mixed Reality, but Microsoft will not add any new features to WinRT APIs.

You can start developing for OpenXR in Unity and Unreal Engine. Find the details below.

OpenXR in Unity

Today, the supported Unity development path for HoloLens 2, HoloLens (1st gen) and Windows Mixed Reality headsets is Unity 2019 LTS with the existing WinRT API backend. If you’re targeting the new HP Reverb G2 controller in a Unity 2019 app, see our HP Reverb G2 input docs.

Starting with Unity 2020 LTS, Unity will ship an OpenXR backend that supports HoloLens 2 and Windows Mixed Reality headsets. This includes support for the OpenXR extensions that light up the full capabilities of HoloLens 2 and Windows Mixed Reality headsets, including hand/eye tracking, spatial anchors and HP Reverb G2 controllers. A preview version of Unity’s OpenXR package will be available later this year. MRTK-Unity support for OpenXR is currently under development in the mrtk_development branch and will be available alongside that OpenXR preview package.

Starting in Unity 2021, OpenXR will then graduate to be the only supported Unity backend for targeting HoloLens 2 and Windows Mixed Reality headsets.

OpenXR in Unreal Engine

As of Unreal Engine 4.23, full support for HoloLens 2 and Windows Mixed Reality headsets is available through the Windows Mixed Reality (WinRT) plugin.

Unreal Engine 4.23 was also the first major game engine release to ship preview support for OpenXR 1.0! Now in Unreal Engine 4.26, support for HoloLens 2, Windows Mixed Reality and other desktop VR headsets will be available through Unreal Engine’s built-in OpenXR plugin. Unreal Engine 4.26 will also ship with its first set of OpenXR extension plugins enabling hand interaction and HP Reverb G2 controller support, lighting up the full feature set of HoloLens 2 and Windows Mixed Reality headsets. Unreal Engine 4.26 is available in preview today on the Epic Games Launcher, with the official release coming later this year. MRTK-Unreal support for OpenXR will be available alongside that release as well.

If you’re building your own DirectX engine for HoloLens 2, Windows Mixed Reality or other PC VR headsets, Microsoft now recommends OpenXR.

Source: Microsoft

This page details how to use and configure OpenXR input within Unity.

For information on how to configure Unity to use OpenXR input, see the Getting Started section of this document.

Overview

Initially, Unity will provide a controller-based approach to interfacing with OpenXR. This will allow existing games and applications that use Unity's Input System or the Feature API to continue to use their existing input mechanisms.

The Unity OpenXR package provides a set of controller layouts for various devices that you can bind to your actions when using the Unity Input System. For more information, see the Interaction profile features section.

To use OpenXR Input, you must select the correct interaction profile features to send to OpenXR. To learn more about OpenXR features in Unity, see the Interaction profile features page.

Future versions of the Unity OpenXR Package will provide further integration with the OpenXR Input system. For smooth upgrading, Unity recommends that you use the device layouts included with the OpenXR package. These have the '(OpenXR)' suffix in the Unity Input System binding window.

Unity will provide documentation on these features when they become available.

Interaction profiles manifest themselves as device layouts in the Unity Input System.

Getting Started

Run the sample

The OpenXR package contains a sample named Controller that will help you get started using input in OpenXR. To install the Controller sample, follow these steps:

- Open the Package Manager window (menu: Window > Package Manager).

- Select the OpenXR package in the list.

- Expand the Samples list on the right.

- Click the Import button next to the Controller sample.

This adds a Samples folder to your project with a Scene named ControllerSample that you can run.

Locking input to the game window

Versions V1.0.0 to V1.1.0 of the Unity Input System only route data to or from XR devices to the Unity Editor while the Editor is in the Game view. To work around this issue, use the Unity OpenXR Project Validator or follow these steps:

- Access the Input System Debugger window (menu: Window > Analysis > Input Debugger).

- In the Options section, enable the Lock Input to the Game Window option.

Unity recommends that you enable the Lock Input to the Game Window option from either the Unity OpenXR Project Validator or the Input System Debugger window.

Recommendations

To set up input in your project, follow these recommendations:

- Bind to the OpenXR layouts wherever possible.

- Use specific control bindings over usages.

- Avoid generic 'any controller' bindings if possible (for example, bindings to <XRController>).

- Use action references and avoid inline action definitions.

OpenXR requires that all bindings be attached only once, at application startup. Unity recommends the use of Input Action Assets, and Input Action References to Actions within those assets, so that Unity can present those bindings to OpenXR at application startup.

Using OpenXR input with Unity

Using OpenXR with Unity is the same as configuring any other input device using the Unity Input System:

1. Decide on what actions and action maps you want to use to describe your gameplay, experience or menu operations.

2. Create an Input Action Asset, or use the one included with the Sample.

3. Add the actions and action maps you defined in step 1 in the Input Action Asset you decided to use in step 2.

4. Create bindings for each action.

   When using OpenXR, you must either create a 'Generic' binding, or use a binding to a device that Unity's OpenXR implementation specifically supports. For a list of these specific devices, see the Interaction bindings section.

5. Save your Input Action Asset.

6. Ensure your actions and action maps are enabled at runtime.

   The Sample contains a helper script called Action Asset Enabler which enables every action within an Input Action Asset. If you want to enable or disable specific actions and action maps, you can manage this process yourself.

7. Write code that reads data from your actions.

   For more information, see the Input System package documentation, or consult the Sample to see how it reads input from various actions.

8. Enable the set of Interaction Features that your application uses.

   If you want to receive input from OpenXR, the Interaction Features you enable must contain the devices you've created bindings with. For example, a binding to <WMRSpatialController>{leftHand}/trigger requires the Microsoft Motion Controller feature to be enabled in order for that binding to receive input. For more information on Interaction Features, see the Interaction profile features section.

9. Run your application!

   You can use the Unity Input System Debugger (menu: Window > Analysis > Input Debugger) to troubleshoot any problems with input and actions.
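The rule that every device-specific binding needs its Interaction Feature enabled can be checked mechanically. The following is a minimal, language-neutral sketch in Python, not Unity API code: the `LAYOUT_FEATURE` dictionary copies a subset of the layouts listed in the Interaction bindings section, and the function name `missing_features` is hypothetical. Generic layouts are treated as having no specific feature requirement, mirroring the "n/a" entries in that table.

```python
import re

# Layout name -> feature that must be enabled (subset of the
# Interaction bindings table). None marks a generic layout ("n/a").
LAYOUT_FEATURE = {
    "XRController": None,
    "XRControllerWithRumble": None,
    "WMRSpatialController": "MicrosoftMotionControllerProfile",
    "OculusTouchController": "OculusTouchControllerProfile",
    "ValveIndexController": "ValveIndexControllerProfile",
    "KHRSimpleController": "KHRSimpleControllerProfile",
    "EyeGaze": "EyeGazeInteraction",
    "HololensHand": "MicrosoftHandInteraction",
}

# Matches paths such as "<WMRSpatialController>{leftHand}/trigger";
# the "{usage}" part is optional.
_BINDING = re.compile(r"^<(\w+)>(?:\{\w+\})?/.+$")


def missing_features(bindings, enabled_features):
    """Return {binding: required feature} for bindings that would get no input."""
    missing = {}
    for binding in bindings:
        match = _BINDING.match(binding)
        if match is None:
            raise ValueError(f"not a binding path: {binding!r}")
        layout = match.group(1)
        if layout not in LAYOUT_FEATURE:
            raise ValueError(f"unknown layout: {layout!r}")
        feature = LAYOUT_FEATURE[layout]
        if feature is not None and feature not in enabled_features:
            missing[binding] = feature
    return missing


if __name__ == "__main__":
    bindings = [
        "<WMRSpatialController>{leftHand}/trigger",
        "<XRController>{rightHand}/grip",
    ]
    # Only the Oculus profile is enabled, so the WMR binding is reported.
    print(missing_features(bindings, {"OculusTouchControllerProfile"}))
```

A check like this could run as part of a project's own validation step; in Unity itself, the OpenXR Project Validator and the Input Debugger remain the tools to rely on.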

Detailed information

Unity Input System

Unity requires the use of the Input System package when using OpenXR. Unity automatically installs this package when you install Unity OpenXR Support. For more information, see the Input System package documentation.

Interaction Profile Features

Each Interaction Profile Feature contains both the device layout for creating bindings in the Unity Input System and a set of bindings that we send to OpenXR. The OpenXR Runtime will determine which bindings to use based on the set of Interaction Profiles that we send to it.

Unity recommends that developers select only the Interaction Profiles that they can test their experience with.

Selecting an Interaction Profile from the features menu will add that device to the bindable devices in the Unity Input System. They will be selectable from under the XR Controller section of the binding options.

Mapping between OpenXR paths and Unity bindings

The OpenXR specification details a number of Interaction Profiles that you can use to suggest bindings to the OpenXR runtime. Unity uses its own existing XRSDK naming scheme to identify controls and devices and map OpenXR action data to them.

The table below outlines the common mappings between OpenXR paths and Unity XRSDK Control names. Which controls are available on which devices is covered in the specific device documentation.

| OpenXR Path | Unity Control Name | Type |
|---|---|---|
| /input/system/click | system | Boolean |
| /input/system/touch | systemTouched | Boolean |
| /input/select/click | select | Boolean |
| /input/menu/click | menu | Boolean |
| /input/squeeze/value | grip | Float |
| /input/squeeze/click | gripPressed | Boolean |
| /input/squeeze/force | gripForce | Float |
| /input/trigger/value | trigger | Float |
| /input/trigger/click | triggerPressed | Boolean |
| /input/trigger/touch | triggerTouched | Boolean |
| /input/thumbstick | joystick | Vector2 |
| /input/thumbstick/touch | joystickTouched | Boolean |
| /input/thumbstick/click | joystickClicked | Boolean |
| /input/trackpad | touchpad | Vector2 |
| /input/trackpad/touch | touchpadTouched | Boolean |
| /input/trackpad/click | touchpadClicked | Boolean |
| /input/a/click | primaryButton | Boolean |
| /input/a/touch | primaryTouched | Boolean |
| /input/b/click | secondaryButton | Boolean |
| /input/b/touch | secondaryTouched | Boolean |
| /input/x/click | primaryButton | Boolean |
| /input/x/touch | primaryTouched | Boolean |
| /input/y/click | secondaryButton | Boolean |
| /input/y/touch | secondaryTouched | Boolean |

The Unity control names touchpad and trackpad are used interchangeably, as are joystick and thumbstick.
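As an illustration of how the mapping is used, the sketch below turns an OpenXR path into a Unity Input System binding path of the form `<Layout>{hand}/control`. It is plain Python rather than Unity code, it copies only a handful of rows from the table, and the names `OPENXR_TO_UNITY`, `unity_binding` and `openxr_paths_for` are hypothetical. Whether a given control actually exists on a given layout depends on the device, so treat the output as a candidate binding only.

```python
# A few rows copied from the mapping table: OpenXR path -> Unity control name.
OPENXR_TO_UNITY = {
    "/input/system/click": "system",
    "/input/select/click": "select",
    "/input/menu/click": "menu",
    "/input/squeeze/value": "grip",
    "/input/squeeze/click": "gripPressed",
    "/input/trigger/value": "trigger",
    "/input/trigger/touch": "triggerTouched",
    "/input/thumbstick": "joystick",
    "/input/trackpad": "touchpad",
    "/input/a/click": "primaryButton",
    "/input/x/click": "primaryButton",
}


def unity_binding(layout, hand, openxr_path):
    """Build a binding path such as '<WMRSpatialController>{leftHand}/trigger'."""
    control = OPENXR_TO_UNITY[openxr_path]
    return f"<{layout}>{{{hand}}}/{control}"


def openxr_paths_for(control):
    """Reverse lookup: several OpenXR paths can share one Unity control name."""
    return sorted(p for p, c in OPENXR_TO_UNITY.items() if c == control)


if __name__ == "__main__":
    print(unity_binding("WMRSpatialController", "leftHand", "/input/trigger/value"))
    print(openxr_paths_for("primaryButton"))
```

The reverse lookup shows why the mapping is not one-to-one: the A and X buttons both surface as primaryButton, so the hand usage (`{leftHand}` or `{rightHand}`) is what distinguishes them in a binding.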

Pose data

Unity expresses Pose data as individual elements (for example, position, rotation, velocity, and so on). OpenXR expresses poses as a group of data. Unity has introduced a new type to the Input System called a Pose that is used to represent OpenXR poses. The available poses and their OpenXR paths are listed below:

| OpenXR Path | Unity Pose Control |
|---|---|
| /input/grip/pose | devicePose |
| /input/aim/pose | pointerPose |

For backwards compatibility, the existing individual controls will continue to be supported when using OpenXR. The mapping between OpenXR pose data and Unity Input System pose data is found below.

| Pose | Pose Element | Binding | Type |
|---|---|---|---|
| /input/grip/pose | position | devicePosition | Vector3 |
| /input/grip/pose | orientation | deviceRotation | Quaternion |
| /input/aim/pose | position | pointerPosition | Vector3 |
| /input/aim/pose | orientation | pointerRotation | Quaternion |
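The relationship between a grouped pose and the individual backwards-compatible controls can be sketched as follows. This is an illustrative Python model, not the Unity `Pose` type: the `Pose` dataclass, `POSE_CONTROLS` and `split_pose` are hypothetical names, and only position and orientation are modelled because those are the elements the mapping above lists.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Pose:
    """Grouped pose data, as OpenXR reports it."""
    position: tuple   # (x, y, z)
    rotation: tuple   # quaternion (x, y, z, w)


# OpenXR pose path -> Unity pose control, as listed in the pose mapping.
POSE_CONTROLS = {
    "/input/grip/pose": "devicePose",
    "/input/aim/pose": "pointerPose",
}


def split_pose(openxr_path, pose):
    """Expand one grouped pose into the individual Unity controls."""
    pose_control = POSE_CONTROLS[openxr_path]
    prefix = pose_control[: -len("Pose")]   # "devicePose" -> "device"
    return {
        prefix + "Position": pose.position,
        prefix + "Rotation": pose.rotation,
    }


if __name__ == "__main__":
    grip = Pose(position=(0.0, 1.2, 0.3), rotation=(0.0, 0.0, 0.0, 1.0))
    print(split_pose("/input/grip/pose", grip))
```

The OpenXR "orientation" element corresponds to the Unity controls whose names end in "Rotation", which is why the sketch stores it under `rotation`.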

HMD bindings

To read HMD data from OpenXR, use the existing HMD bindings available in the Unity Input System. Unity recommends binding the centerEye action of the XR HMD device for HMD tracking. The following image shows the use of centerEye bindings with the Tracked Pose Driver.

OpenXR HMD Data contains the following elements.

- Center Eye

- Device

- Left Eye

- Right Eye

All of the elements expose the following controls:

- position

- rotation

- velocity

- angularVelocity


These are exposed in the Unity Input System through the following bindings. These bindings can be found under the XR HMD menu option when binding actions within the Input System.

- <XRHMD>/centerEyePosition

- <XRHMD>/centerEyeRotation

- <XRHMD>/devicePosition

- <XRHMD>/deviceRotation

- <XRHMD>/leftEyePosition

- <XRHMD>/leftEyeRotation

- <XRHMD>/rightEyePosition

- <XRHMD>/rightEyeRotation

When using OpenXR the centerEye and device values are identical.

The HMD position reported by Unity when using OpenXR is calculated from the currently selected Tracking Origin space within OpenXR.

- The Unity Device Tracking Origin is mapped to Local Space.

- The Unity Floor Tracking Origin is mapped to Stage Space.

By default, Unity attempts to attach the Stage Space where possible. To help manage the different tracking origins, use the XR Rig from the XR Interaction Package, or the Camera Offset component from the Legacy Input Helpers package.

Interaction bindings

If you use OpenXR input with controllers or interactions such as eye gaze, Unity recommends that you use bindings from the Device Layouts available with the Unity OpenXR package. The Unity OpenXR package provides the following Layouts via features:

| Device | Layout | Feature |
|---|---|---|
| Generic XR controller | <XRController> | n/a |
| Generic XR controller w/ rumble support | <XRControllerWithRumble> | n/a |
| Windows Mixed Reality controller | <WMRSpatialController> | MicrosoftMotionControllerProfile |
| Oculus Touch (Quest, Rift) | <OculusTouchController> | OculusTouchControllerProfile |
| HTC Vive controller | <ViveController> | HTCViveControllerProfile |
| Valve Index controller | <ValveIndexController> | ValveIndexControllerProfile |
| Khronos Simple Controller | <KHRSimpleController> | KHRSimpleControllerProfile |
| Eye Gaze Interaction | <EyeGaze> | EyeGazeInteraction |
| Microsoft Hand Interaction | <HololensHand> | MicrosoftHandInteraction |

Debugging

For more information on debugging OpenXR input, see the Input System Debugging documentation.

Future plans


Looking ahead, we will work towards allowing Unity users to leverage more functionality of OpenXR's input stack, allowing the runtime to bind Unity Actions to OpenXR Actions. This will allow OpenXR Runtimes to perform much more complex binding scenarios than currently possible.